Data Quality: Can Programmatic Quality Checks Outperform Manual Reviews?

By Tim McCarthy, Imperium General Manager

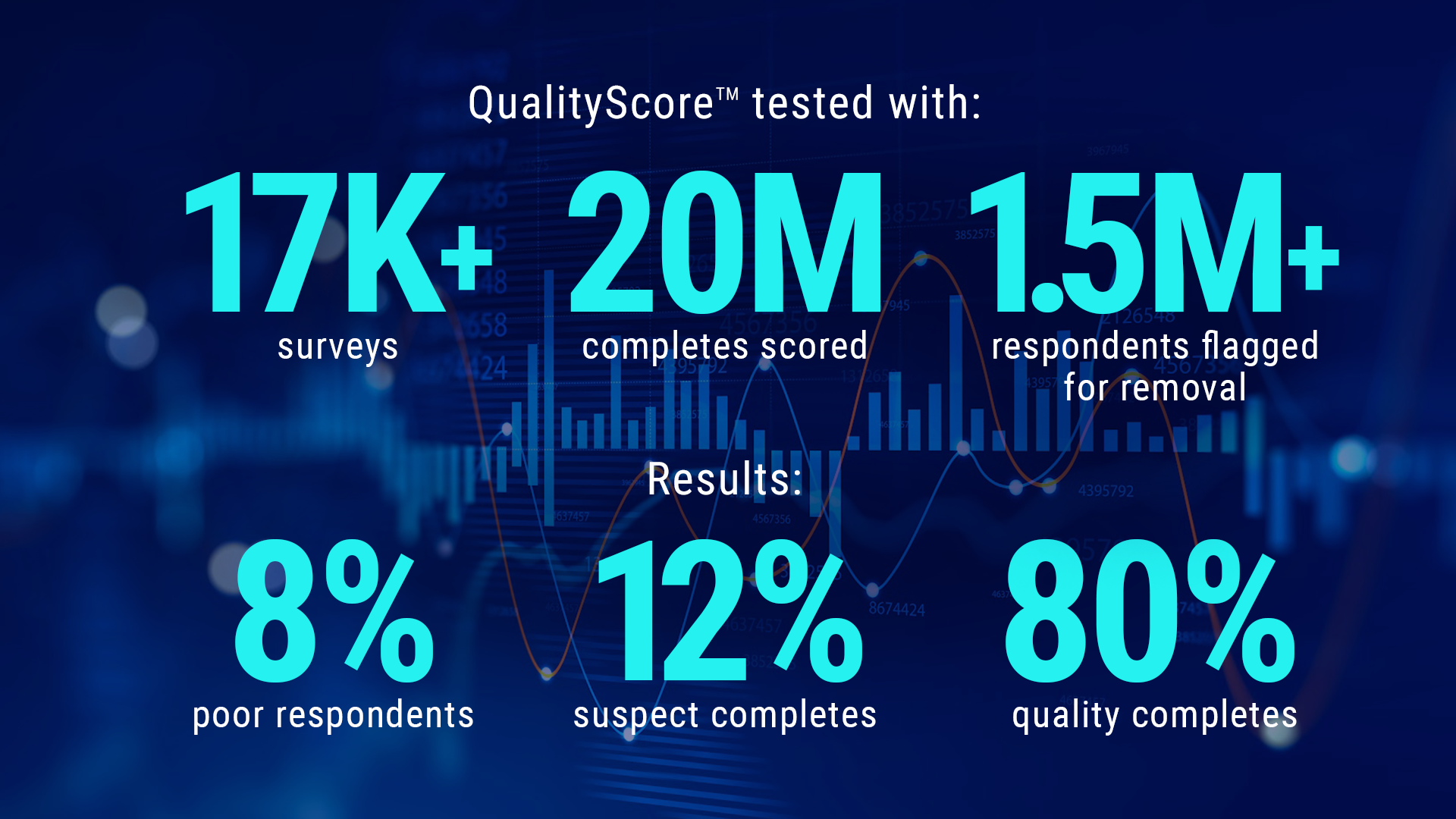

We launched our new automated tool, QualityScore, in 2021, to help streamline in-survey data quality checks, at the same time delivering significant cost and time savings.

As we analyzed the data from over 17K surveys (with 20m completes scored and 1.5m+ respondents flagged for removal), we expected to see automated outputs mirroring manual removals. Instead, we learned something far more interesting about the nature of the overlap between automated and manual removals.

We found that the automated checks, while flagging roughly similar numbers of respondents for removal, were, in fact, returning different groups from those identified via the manual process. The average overlap between automated and manual removals was just 50-60%.

Initially, this seemed like a worrying development. Were we checking for the right factors? Was our ML model operating correctly? Had we trained it with the right data?

The overlap isn’t a glitch – far from it. The fact that automated and manual checks are returning somewhat different results is actually a very good thing – and here’s why.

While manual checks can be effective in identifying clearly fraudulent respondents, they’re also more likely to miss more sophisticated cheaters – the ones who know not to fall foul of the standard speeder checks, the ones who aren’t straightlining at grids, the ones who provide seemingly accurate OEs. At times, to the naked eye, these could appear to be among the best respondents in the survey. Our automated checks are calibrated to catch these cheaters by utilizing passive and behavioral data (i.e. survey taking acceleration, copy/pasted OEs, repeat OEs across different respondents, mouse movement, etc).

Also, while our research demonstrates cheaters don’t all cheat in the same way it also demonstrates that good respondents don’t fall neatly into a homogenous data subset.

Good respondents don’t necessarily exhibit uniformly good behaviors – and if you base your removals on a single factor, you’ll risk biasing your data. For example, removing respondents on the results of speeding checks alone may unfairly affect younger age groups who generally complete surveys more quickly. A recent report, created through QualityScore activity, showed that respondents in the 18-34 age category failed standard speeding checks twice as often as respondents aged 45+.

Manual checks are useful, but a more automated respondent-scoring process delivers greater objectivity and increases the likelihood of engaging with the most appropriate respondents. Manual checks often cast a large net to make sure they catch the highest number of poor respondents. However, they frequently return a high false-positive rate (real respondents who may have unwittingly tripped one or two flags); we see this a lot when a respondent submits one relatively poor or unengaged OE response.

We know that the broad-brush approach that’s often a feature of manual checks, isn’t deployed because it’s necessarily the best method, but because reviewing all data points in unison for every respondent would simply be too time consuming. By removing respondents who trip flags – even for minor infractions – you will likely catch all the bad ones but you’ll also risk excluding many who are real, somewhat imperfect, respondents. Only by reviewing all key data points together can you confidently identify the difference between an imperfect real person with mostly good data, and a flat-out cheater.

Because our automation also flags respondents who stray from the standard response pattern for that specific survey, it also counters any bias that’s down to the quirks of survey design – if 90% of respondents are straight lining at one question, for example, maybe it’s a fault in the survey rather than an indication of wholesale cheating.

On average, QualityScore flags about 8% of respondents as ‘bad’, with an accuracy rate of 99% in field tests (confirmed through manual checks).

Data quality is a moving target. Which means that when it comes to tech, you can’t ‘set it and forget it’. Relying on increasingly out-of-date intelligence simply won’t work when even less sophisticated cheaters are becoming smarter as they realize they are getting flagged/ removed.

As a rule, real-time automated tools give researchers much greater control over the QA process than manual checks, without committing additional time and resources. Research companies using our fully automated solution save about 85%+ of the time they would otherwise spend checking survey results to identify bad respondents. Moreover, using ML to learn from respondents – good and bad – helps to refine our data-quality solutions so they become more intelligent and more responsive. Standing still simply isn’t an option.

Tim McCarthy is General Manager at Imperium. He has over 15 years of experience managing market research and data-collection services and is an expert in survey programming software, data analysis and data quality. Imperium is the foremost provider of technology services and customized solutions to panel and survey organizations, verifying personal information and restricting fraudulent online activities.